Alfonso Casani – FUNCI

The Spanish newspaper La Vanguardia recently reported the results of an experiment designed to test whether artificial intelligence can make moral judgments, which ended up exposing the machine’s racist and misogynist bias. The experiment is a paradigmatic case of a basic problem: artificial intelligence is conditioned by the knowledge on which it relies. If we are racist, our machines will be too.

In simple terms, artificial intelligence refers to the capacity of machines and their processors to learn from the knowledge and databases they are given and to produce autonomous responses that adapt to their environment. As the European Parliament itself explains, the aim is for machines to be able to reason, learn, create and plan their actions.

The experiment reported by the Spanish newspaper analyzed a computer's responses to different situations and behaviors, based on knowledge it had acquired from online networks and forums. To the surprise of the scientists involved, the machine's responses reflected racist attitudes (a mistrust of black people, for example) and sexist ones (for instance, considering it acceptable to kiss a woman even though she had refused).

The experiment highlights one of the great problems of artificial intelligence: its systems must rely on databases compiled by humans, which implicitly transmit many of the judgments (and prejudices) of their designers. As the article in La Vanguardia concludes, in this case the machine merely offers “a condensation of our behavior as a digital society”.

White designers and racist products

This example is only the latest in a long list of products and studies that show the risk of transmitting patterns of discrimination to the machines and programs we develop. To begin with, programs built on historical data or reports can end up repeating the behavior recorded in them.

In 2016, researchers reported that the Correctional Offender Management Profiling for Alternative Sanctions (COMPAS) program, a criminal recidivism risk assessment tool, showed a discriminatory tendency against black Americans. The program, it was reported, was twice as likely to label a black person a potential recidivist as a white person. Although the specific calculations behind the program are unknown, the case exposed one basic problem: the program relied on the historical statistics of the U.S. prison population, in which black people make up the largest percentage.
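
A disparity of this kind can be checked with very little code. The sketch below is only an illustration, not the method used by the researchers: the function name, the group labels and the data are invented, and it simply compares how often each group is flagged as high risk.

```python
from collections import Counter


def high_risk_rate_by_group(records):
    """Return the share of people flagged as high risk within each group.

    `records` is an iterable of (group, is_flagged) pairs.
    """
    totals = Counter()
    flagged = Counter()
    for group, is_flagged in records:
        totals[group] += 1
        if is_flagged:
            flagged[group] += 1
    return {group: flagged[group] / totals[group] for group in totals}


# Invented toy data (NOT real COMPAS figures): 10 people per group.
toy_records = (
    [("group_a", True)] * 4 + [("group_a", False)] * 6
    + [("group_b", True)] * 2 + [("group_b", False)] * 8
)

rates = high_risk_rate_by_group(toy_records)
# A ratio well above 1 means group_a is flagged more often than group_b.
disparity_ratio = rates["group_a"] / rates["group_b"]
print(rates, disparity_ratio)
```

A ratio like this does not by itself prove discrimination, but it is the kind of simple audit that reveals when a program treats two groups very differently.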

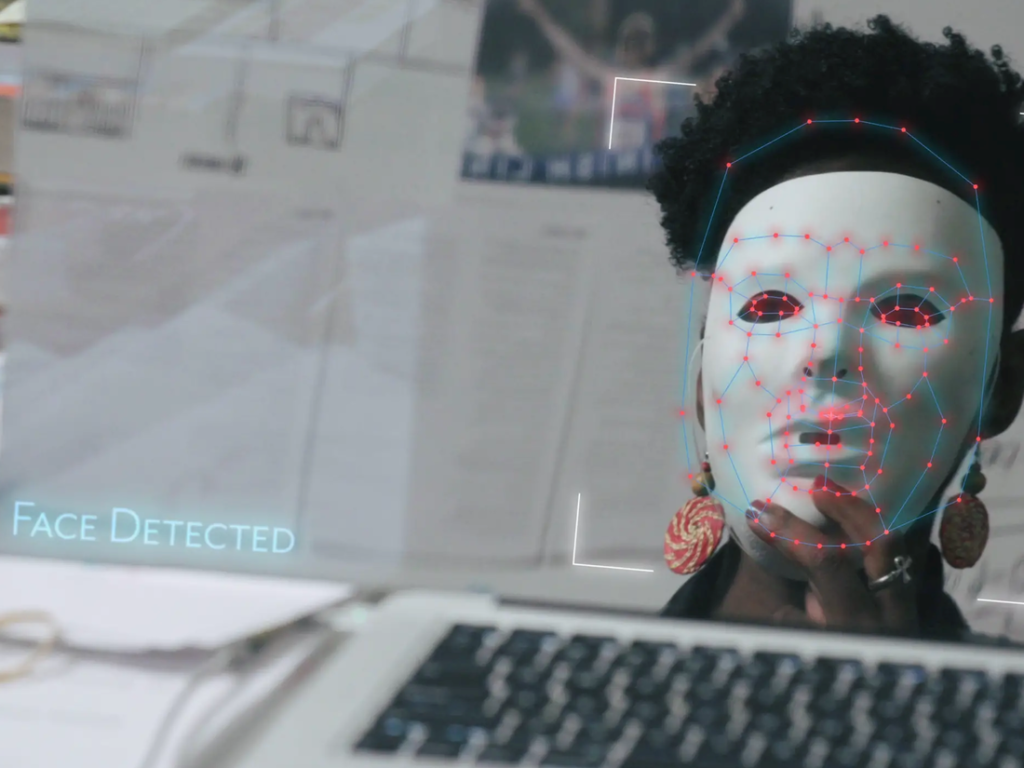

Equally shocking are the many complaints made against facial recognition systems in recent years. Particularly notable was the bias shown by the Google Photos application, whose software for classifying people and objects labeled black people as gorillas, as reported in 2015.

In the same vein, Joy Buolamwini, a student at the renowned Massachusetts Institute of Technology (MIT), denounced that same year the problems facial recognition programs had in recognizing the faces of black people. After noticing that these programs could not detect her face, as a person of color, Buolamwini put on a white mask, only to find that her features were then identified without difficulty. The problem was worse for women than for men. She applied her study to the artificial intelligence systems of the major technology giants Amazon, IBM and Microsoft. On average, all three showed an error rate of less than 1% when the faces analyzed belonged to white males; however, the error rate rose to 35% for the faces of black women. The problem, again, leads back to the databases used, in which black people and women are underrepresented: they contain more photographs and data of white men, which the machines use to learn to identify the features analyzed.
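
The gap between these error rates only becomes visible when results are broken down by group. The following sketch assumes a hypothetical list of test results; the group names and counts are invented, chosen merely to echo the orders of magnitude mentioned above, and do not come from the study itself.

```python
def error_rate_by_group(samples):
    """Return the error rate per group.

    `samples` is an iterable of (group, true_label, predicted_label) triples.
    """
    totals, errors = {}, {}
    for group, truth, predicted in samples:
        totals[group] = totals.get(group, 0) + 1
        if truth != predicted:
            errors[group] = errors.get(group, 0) + 1
    return {g: errors.get(g, 0) / totals[g] for g in totals}


# Invented toy results: one group is heavily overrepresented in the test set.
toy_samples = (
    [("white_men", "face", "face")] * 99
    + [("white_men", "face", "no_face")] * 1
    + [("black_women", "face", "face")] * 13
    + [("black_women", "face", "no_face")] * 7
)

per_group = error_rate_by_group(toy_samples)
overall = sum(t != p for _, t, p in toy_samples) / len(toy_samples)
print(per_group, overall)
```

In this toy case the overall error rate stays below 7%, while the underrepresented group has an error rate of 35%: a single aggregate figure can hide exactly the bias described here.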

Underlying this is a second problem, which helps explain the bias: ten of the largest technology companies in Silicon Valley have no black female employees, and three of them have no employees of color at all. Subconsciously, the designers of these systems reproduce their own environment, favoring the representation of the white, male population over that of minorities.

A similar case went viral several years ago, when an automatic soap dispenser failed to detect the hand of a black man but responded to that of his white companion.

A social problem

These examples are not intended to deny the many virtues of technological development and artificial intelligence, but rather to prompt reflection on its lack of neutrality and on the risk of reproducing the discriminatory patterns that lie behind it. This article mentions only two of the many problems these technological systems face: the reproduction of discrimination against minority groups, and the reproduction of their lack of representation. Both are social problems rather than technological ones, and they reveal shortcomings that exist within society itself. The inevitable conclusion is that it is not the computer systems that need to be corrected, but the societies themselves.
